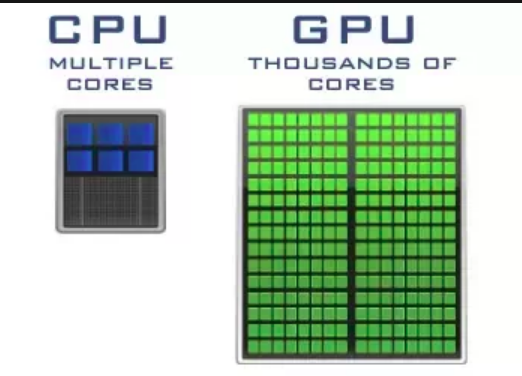

I think one of the most promising technologies of the next couple of years is the use of GPUs to accelerate all kinds of jobs.

One company heading in this direction is OmniSci (formerly MapD). They have a live demo showing how a GPU processes almost 400 million tweets and visualizes them geographically in less than 1 second (check it out: http://scl2-04-gpu03.mapd.com:8003/). @szilard covers a benchmark that indicates a significant speedup.

I wondered whether the blazing performance claimed for GPU databases was real, so I decided to benchmark one of them myself. I chose OmniSci because it currently has a cloud option (so I don't have to buy a GPU to try it out). I was lucky to get an OmniSci cloud account (2 GB GPU RAM, 8 GB system RAM) thanks to my education affiliation. I used 2008 flight data with 7 million rows (source: https://lnkd.in/eATNq-M). The test query is an aggregation over that data with sufficient cardinality.
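To make the measurement idea concrete, here is a minimal sketch of how the min and max time of an aggregation query can be recorded. It is not the setup I used: it runs against an in-memory SQLite database as a stand-in, and the table name `flights`, the columns `carrier`, `origin`, `arr_delay`, and the helper `time_query` are all hypothetical names with synthetic data.

```python
import sqlite3
import time


def time_query(conn, sql, runs=5):
    """Run `sql` several times and return (min, max) wall-clock seconds."""
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        conn.execute(sql).fetchall()  # fetching all rows forces full evaluation
        timings.append(time.perf_counter() - start)
    return min(timings), max(timings)


# Stand-in database: in-memory SQLite with a small synthetic table
# (hypothetical schema, not the real 2008 flight data).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE flights (carrier TEXT, origin TEXT, arr_delay REAL)")
rows = [(f"C{i % 20}", f"A{i % 300}", float(i % 90)) for i in range(100_000)]
conn.executemany("INSERT INTO flights VALUES (?, ?, ?)", rows)

# Aggregation over two grouping columns, so the result has many groups.
AGG_SQL = """
SELECT carrier, origin, COUNT(*) AS n, AVG(arr_delay) AS avg_delay
FROM flights
GROUP BY carrier, origin
"""

fastest, slowest = time_query(conn, AGG_SQL)
groups = conn.execute(AGG_SQL).fetchall()
print(f"{len(groups)} groups, min {fastest:.4f} s, max {slowest:.4f} s")
```

The same `time_query` helper should work with any DB-API style connection whose `execute` returns a cursor; for drivers that require an explicit cursor, the call would need a small adjustment.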

Here are the results:

1) Hard-disk-based database (MySQL): 13 s (min)

2) GPU-based database (OmniSci):

– executed entirely in the cloud: 40 ms (max)

– executed in the cloud, result retrieved on my local machine (including transfer time from the US to NL): 8.5 s (max)

– executed in a container on my local machine (Docker, OmniSci Community Edition) using an NVIDIA GTX 650 Ti: 400 ms (max)
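
For a rough sense of scale, the arithmetic behind these numbers can be sketched as follows. The variable names are mine; the values are the timings listed above. Note that this compares the MySQL minimum against the OmniSci maximum, so for these runs the ratios are conservative.

```python
# Timings from the list above, in seconds.
mysql_s = 13.0                # MySQL, fastest run
omnisci_cloud_s = 0.040       # OmniSci, fully in the cloud, slowest run
omnisci_cloud_remote_s = 8.5  # OmniSci in the cloud, result fetched locally
omnisci_local_s = 0.400       # OmniSci in a local Docker container, GTX 650 Ti

cloud_speedup = mysql_s / omnisci_cloud_s    # about 325x
local_speedup = mysql_s / omnisci_local_s    # about 32.5x
# Time spent outside query execution when the result is fetched from the US to NL
transfer_overhead_s = omnisci_cloud_remote_s - omnisci_cloud_s  # about 8.46 s

print(f"cloud speedup: {cloud_speedup:.1f}x")
print(f"local speedup: {local_speedup:.1f}x")
print(f"transfer overhead: {transfer_overhead_s:.2f} s")
```

In other words, once the result has to travel across the Atlantic, almost all of the 8.5 s is transfer time rather than query time.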